# How to install PySpark on Windows 10

I later guessed that this could have been caused by the filesystem type (exFAT). Modifying these permissions still did not yield result, the error persisted.

#How to install pyspark on windows 10 windows#

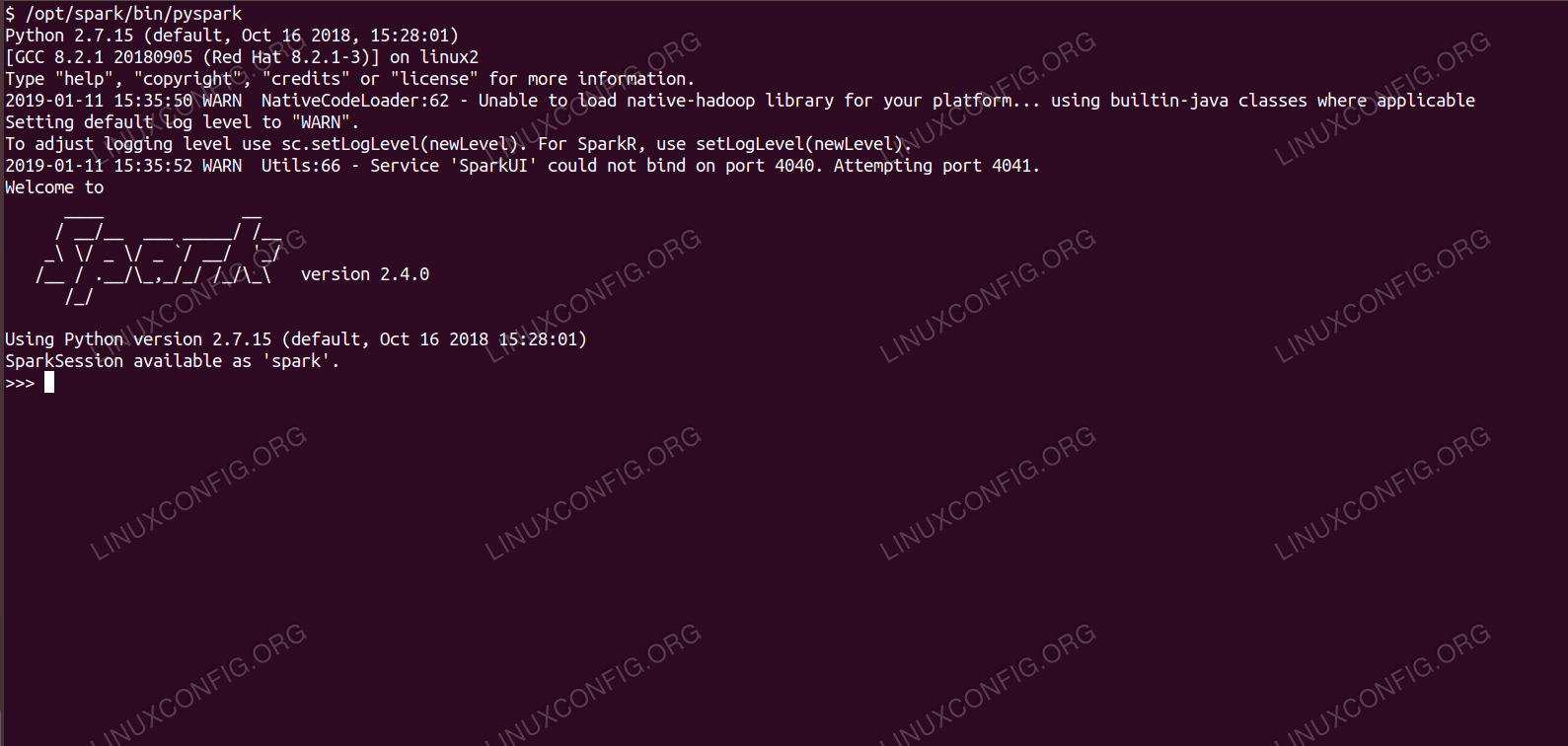

Jupyter/pyspark-notebook create notebook failed permission denied windows This can be fixed simply by removing the stopped container: docker rm You have to remove (or rename) that container to be able to reuse that name. First, a typical situation after restarting the container:ĭo cker: Error response from daemon: Conflict. Now, some errors you are likely to experience. You will find it by typing: docker logs spark. If that is the case, then direct the browser. Note: you can also run the container in the detached mode (-d). You are now in the Jupyter session, which is inside the docker container so you can access the Spark there. Copy and paste the Jupyter notebook token handle to your local browser, replacing the host address with ‘ localhost‘. The -p option is for ports you will need mapped.Īs you’ll note in the logs, the Jupyter container is being started inside the image. The directory might be empty, you will need to put some files later. This directory will be accessed by the container, that’s what option -v is for. Replace ” D:\ sparkMounted” with your local working directory. Installing and running Spark & connecting with JupyterĪfter downloading the image with docker pull, this is how you start it on Windows 10:ĭocker run -p 8888:8888 -p 4040:4040 -v D:\ sparkMounted:/home/jovyan/work -name spark jupyter/pyspark-notebook You will be using this Spark and Python setup if you are part of my Big Data classes. I will not repeat this here but instead focus on understanding and testing various ways to access and use the stack, assuming that the reader has the basic Docker/Jupyter background.Īlso do check my consulting & training page.
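The `docker run` invocation and the "replace the host with localhost" step described above can be sketched in Python as follows. This is only an illustration: the helper names `build_docker_run` and `localhost_url` are hypothetical, and the pattern used to find the token URL assumes the log line contains something like `:8888/?token=...`, which may differ between Jupyter versions.

```python
import re


def build_docker_run(host_dir, name="spark",
                     image="jupyter/pyspark-notebook", detached=False):
    """Assemble the docker run argument list used in this post.

    host_dir is your local working directory (for example D:\\sparkMounted).
    """
    cmd = ["docker", "run"]
    if detached:
        cmd.append("-d")  # detached mode: logs are read later with `docker logs`
    cmd += [
        "-p", "8888:8888",                     # Jupyter
        "-p", "4040:4040",                     # Spark web UI
        "-v", f"{host_dir}:/home/jovyan/work", # mounted working directory
        "--name", name,
        image,
    ]
    return cmd


# Assumption: the log contains "<host>:<port>/<path>?token=<token>".
_TOKEN_URL = re.compile(r":(\d+)(/\S*?\?token=\w+)")


def localhost_url(log_text):
    """Return the first token URL found in log_text, rewritten to localhost.

    Returns None when no token URL is found.
    """
    match = _TOKEN_URL.search(log_text)
    if match is None:
        return None
    port, path = match.groups()
    return f"http://localhost:{port}{path}"
```

The list returned by `build_docker_run` can be passed to `subprocess.run`, and the text printed by `docker logs spark` can be fed to `localhost_url`. Building the command as a list rather than a single string avoids quoting problems with Windows paths.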

My suggestion for the quickest install is to get a Docker image with everything (Spark, Python, Jupyter) preinstalled. Obviously, this will run Spark in local mode on a single machine, so you will not be able to run Spark jobs in a distributed environment.

Having tried various preloaded Docker Hub images, I started liking this one: jupyter/pyspark-notebook. The summary in this post is hopefully everything you need to get started with this image. If it is not sufficient, consult the Docker image documentation or read the useful third-party usage commentary by Max Melnick. Max describes Jupyter and Docker in greater detail, and his page is recommended if you have not used Docker or Jupyter much before.
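To check that Spark really runs in local mode inside such an image, a small smoke test can be run from a notebook cell. The sketch below is a minimal example, not part of the image: the function name `run_local_smoke_test` and the application name are made up for illustration, and it assumes `pyspark` is importable in the notebook's Python environment.

```python
def run_local_smoke_test(n=5):
    """Start a local Spark session, count n generated rows, and stop it."""
    # Imported inside the function so that merely defining it
    # does not require pyspark to be installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")        # local mode, using all available cores
        .appName("smoke-test")     # hypothetical application name
        .getOrCreate()
    )
    try:
        # spark.range(n) builds a DataFrame with n rows (ids 0 .. n-1)
        return spark.range(n).count()
    finally:
        spark.stop()
```

Calling `run_local_smoke_test()` in a notebook should return the number of rows requested. While the session is alive, the Spark web UI is normally served on port 4040, which is why that port is mapped when the container is started.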

Apache Spark is a popular distributed computation environment. It is written in Scala, but you can also use it from Python. For those who want to learn Spark with Python (including students of these BigData classes), here's an intro to the simplest possible setup. To experiment with Spark and Python (PySpark or Jupyter), you need to install both. Here is how to get such an environment on your laptop, along with some troubleshooting you might need to get through.

Python Requirements

At its core PySpark depends on Py4J, but some additional sub-packages have their own extra requirements for some features (including numpy, pandas, and pyarrow).

NOTE: If you are using pip-installed PySpark with a Spark standalone cluster, you must ensure that the version (including the minor version) matches, or you may experience odd errors.
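The version-matching requirement in the note above can be checked with a few lines of Python. This is a rough sketch under the assumption that "including minor version" means the major and minor components must agree; the helper names `major_minor` and `versions_match` are hypothetical.

```python
def major_minor(version):
    """Return (major, minor) from a version string such as '3.5.1'."""
    parts = version.strip().split(".")
    return int(parts[0]), int(parts[1])


def versions_match(client_version, cluster_version):
    """True when the major and minor components of both versions agree."""
    return major_minor(client_version) == major_minor(cluster_version)
```

In practice the client version is usually available as `pyspark.__version__` and the version of the running Spark as `spark.version` on an active session, so `versions_match(pyspark.__version__, spark.version)` is one way to apply the check.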

Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Scala, Java, Python, and R, and an optimized engine that supports general computation graphs for data analysis. It also supports a rich set of higher-level tools including Spark SQL for SQL and DataFrames, MLlib for machine learning, GraphX for graph processing, and Structured Streaming for stream processing.

You can find the latest Spark documentation, including a programming guide, on the project web page.

Python Packaging

This README file only contains basic information related to pip-installed PySpark. This packaging is currently experimental and may change in future versions (although we will do our best to keep compatibility). Using PySpark requires the Spark JARs; if you are building this from source, please see the builder instructions.

The Python packaging for Spark is not intended to replace all of the other use cases. This Python-packaged version of Spark is suitable for interacting with an existing cluster (be it Spark standalone, YARN, or Mesos), but it does not contain the tools required to set up your own standalone Spark cluster. You can download the full version of Spark from the Apache Spark downloads page.
